By Alnoor Ebrahim, Tufts University

OpenAI, the maker of the most popular AI chatbot, used to say in its mission statement that it aimed to build artificial intelligence that “safely benefits humanity, unconstrained by a need to generate financial return.” But the ChatGPT maker seems to no longer have the same emphasis on doing so “safely.”

While reviewing its latest IRS disclosure form, which was released in November 2025 and covers 2024, I noticed OpenAI had removed “safely” from its mission statement, among other changes. That change in wording coincided with its transformation from a nonprofit organization into a business increasingly focused on profits.

OpenAI currently faces several lawsuits related to its products’ safety, making this change newsworthy. Many of the plaintiffs suing the AI company allege psychological manipulation, wrongful death and assisted suicide, while others have filed negligence claims.

As a scholar of nonprofit accountability and the governance of social enterprises, I see the deletion of the word “safely” from its mission statement as a significant shift that has largely gone unreported – outside highly specialized outlets.

And I believe OpenAI’s makeover is a test case for how we, as a society, oversee the work of organizations that have the potential to both provide enormous benefits and do catastrophic harm.

Tracing OpenAI’s origins

OpenAI, which also makes the Sora video artificial intelligence app, was founded as a nonprofit scientific research lab in 2015. Its original purpose was to benefit society by making its findings public and royalty-free rather than to make money.

To raise the money that developing its AI models would require, OpenAI, under the leadership of CEO Sam Altman, created a for-profit subsidiary in 2019. Microsoft initially invested US$1 billion in this venture; by 2024 that sum had topped $13 billion.

In exchange, Microsoft was promised a portion of future profits, capped at 100 times its initial investment. But the software giant didn’t get a seat on OpenAI’s nonprofit board – meaning it lacked the power to help steer the AI venture it was funding.

A subsequent round of funding in late 2024, which raised $6.6 billion from multiple investors, came with a catch: that the funding would become debt unless OpenAI converted to a more traditional for-profit business in which investors could own shares, without any caps on profits, and possibly occupy board seats.

Establishing a new structure

In October 2025, OpenAI reached an agreement with the attorneys general of California and Delaware to become a more traditional for-profit company.

Under the new arrangement, OpenAI was split into two entities: a nonprofit foundation and a for-profit business.

The restructured nonprofit, the OpenAI Foundation, owns about one-fourth of the stock in a new for-profit public benefit corporation, the OpenAI Group. Both are headquartered in California but incorporated in Delaware.

A public benefit corporation is a business that must consider interests beyond shareholders, such as those of society and the environment, and it must issue an annual benefit report to its shareholders and the public. However, it is up to the board to decide how to weigh those interests and what to report in terms of the benefits and harms caused by the company.

The new structure is described in a memorandum signed in October 2025 by OpenAI and the California attorney general, and endorsed by the Delaware attorney general.

Many business media outlets heralded the move, predicting that it would usher in more investment. Two months later, SoftBank, a Japanese conglomerate, finalized a $41 billion investment in OpenAI.

Changing its mission statement

Most charities must file forms annually with the Internal Revenue Service with details about their missions, activities and financial status to show that they qualify for tax-exempt status. Because the IRS makes the forms public, they have become a way for nonprofits to signal their missions to the world.

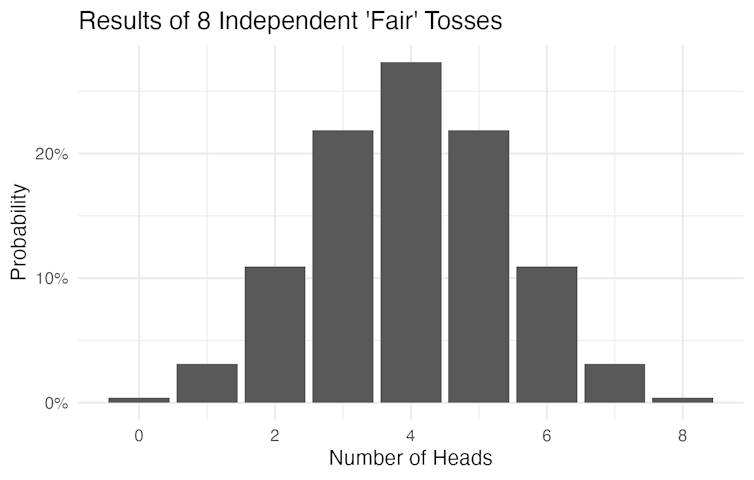

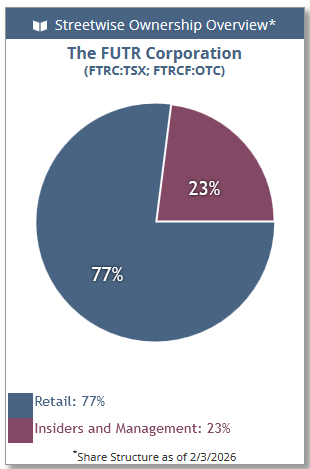

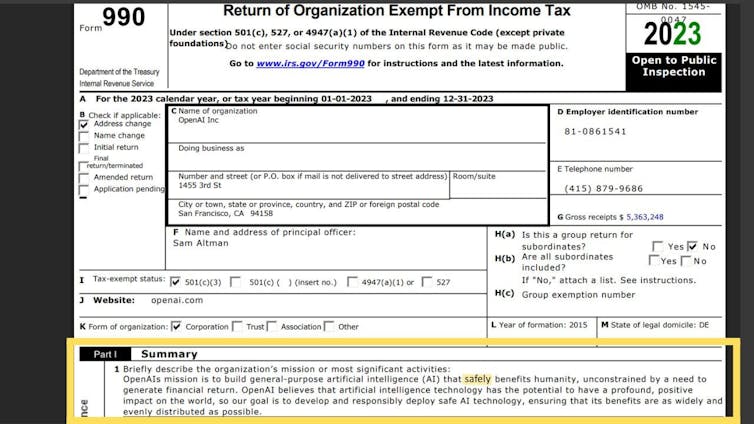

In its forms for 2022, OpenAI said its mission was “to build general-purpose artificial intelligence (AI) that safely benefits humanity, unconstrained by a need to generate financial return.”

IRS via Candid

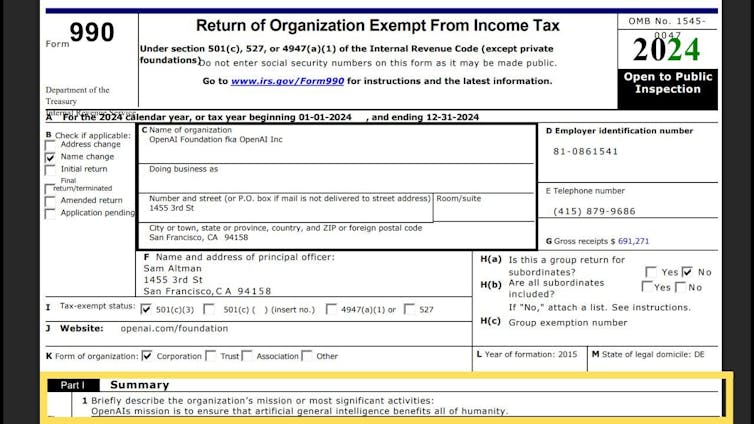

That mission statement has changed, as of its form for 2024, which the company filed with the IRS in late 2025. It became “to ensure that artificial general intelligence benefits all of humanity.”

IRS via Candid

OpenAI had dropped its commitment to safety from its mission statement – along with a commitment to being “unconstrained” by a need to make money for investors. According to Platformer, a tech media outlet, it has also disbanded its “mission alignment” team.

In my view, these changes explicitly signal that OpenAI is making its profits a higher priority than the safety of its products.

To be sure, OpenAI continues to mention safety when it discusses its mission. “We view this mission as the most important challenge of our time,” it states on its website. “It requires simultaneously advancing AI’s capability, safety, and positive impact in the world.”

Revising its legal governance structure

Nonprofit boards are responsible for key decisions and upholding their organization’s mission.

Unlike their counterparts at private companies, board members of tax-exempt charitable nonprofits cannot personally enrich themselves by taking a share of earnings. In cases where a nonprofit owns a for-profit business, as OpenAI did with its previous structure, investors can take a cut of profits – but they typically do not get a seat on the board or have an opportunity to elect board members, because that would be seen as a conflict of interest.

The OpenAI Foundation now has a 26% stake in OpenAI Group. In effect, that means that the nonprofit board has given up nearly three-quarters of its control over the company. Software giant Microsoft owns a slightly larger stake – 27% of OpenAI’s stock – due to its $13.8 billion investment in the AI company to date. OpenAI’s employees and its other investors own the rest of the shares.

Seeking more investment

The main goal of OpenAI’s restructuring, which it called a “recapitalization,” was to attract more private investment in the race for AI dominance.

It has already succeeded on that front.

As of early February 2026, the company was in talks with SoftBank for an additional $30 billion and stands to get up to a total of $60 billion from Amazon, Nvidia and Microsoft combined.

OpenAI is now valued at over $500 billion, up from $300 billion in March 2025. The new structure also paves the way for an eventual initial public offering, which, if it happens, would not only help the company raise more capital through stock markets but would also increase the pressure to make money for its shareholders.

OpenAI says the foundation’s endowment is worth about $130 billion.

Those numbers are only estimates because OpenAI is a privately held company without publicly traded shares. That means these figures are based on market value estimates rather than any objective evidence, such as market capitalization.

When he announced the new structure, California Attorney General Rob Bonta said, “We secured concessions that ensure charitable assets are used for their intended purpose.” He also predicted that “safety will be prioritized” and said the “top priority is, and always will be, protecting our kids.”

Steps that might help keep people safe

At the same time, several conditions in the OpenAI restructuring memo are designed to promote safety, including:

- A safety and security committee on the OpenAI Foundation board has authority that could potentially include halting the release of new OpenAI products based on assessments of their risks.

- The for-profit OpenAI Group has its own board, which must consider only OpenAI’s mission – rather than financial issues – when deciding safety and security matters.

- The OpenAI Foundation’s nonprofit board gets to appoint all members of the OpenAI Group’s for-profit board.

But given that neither the mission of the foundation nor of the OpenAI group explicitly alludes to safety, it will be hard to hold their boards accountable for it.

Furthermore, since all but one board member currently serve on both boards, it is hard to see how they might oversee themselves. And the restructuring memo doesn’t indicate whether the California attorney general was aware of the removal of any reference to safety from the mission statement.

Identifying other paths OpenAI could have taken

There are alternative models that I believe would serve the public interest better than this one.

When Health Net, a California nonprofit health maintenance organization, converted to a for-profit insurance company in 1992, regulators required that 80% of its equity be transferred to another nonprofit health foundation. Unlike with OpenAI, the foundation had majority control after the transformation.

A coalition of California nonprofits has argued that the attorney general should require OpenAI to transfer all of its assets to an independent nonprofit.

Another example is The Philadelphia Inquirer. The Pennsylvania newspaper became a for-profit public benefit corporation in 2016. It belongs to the Lenfest Institute, a nonprofit.

This structure allows Philadelphia’s biggest newspaper to attract investment without compromising its purpose – journalism serving the needs of its local communities. It’s become a model for potentially transforming the local news industry.

At this point, I believe that the public bears the burden of two governance failures. One is that OpenAI’s board has apparently abandoned its mission of safety. And the other is that the attorneys general of California and Delaware have let that happen.

About the Author:

Alnoor Ebrahim, Professor of International Business, The Fletcher School & Tisch College of Civic Life, Tufts University

This article is republished from The Conversation under a Creative Commons license. Read the original article.