By Paul Harrison, Deakin University

In a wave of new ads, brands like Heineken, Polaroid and Cadbury have started hating on artificial intelligence (AI), celebrating their work as “human-made”.

But in these advertising campaigns, which run on TV, on billboards on New York streets and on social media, the companies are signalling something larger.

Even Apple’s new series release, Pluribus, includes the phrase “Made by Humans” in the closing credits.

Other brands including H&M and Guess have faced a backlash for using AI brand ambassadors instead of humans.

These gestures suggest we have reached a cultural moment in the evolution of this technology, where people are unsure what creativity means when machines can now produce much of what we see, hear and perhaps even be moved by.

This feels like efficiency – for executives

At a surface level, AI offers efficiencies such as faster production, cheaper visuals, instant personalisation, and automated decisions. Government and business have rushed toward it, drawn by promises of productivity and innovation. And there is no doubt that this promise is deeply seductive. Indeed, efficiency is what AI excels at.

In the context of marketing and advertising, this “promise”, at least at face value, seems to translate to smaller marketing budgets, better targeting, automated decisions (including by chatbots) and rapid deployment of ad campaigns.

For executives, this is exciting and feels like real progress, with cheaper, faster and more measurable brand campaigns.

But advertising has never really just been about efficiency. It has always relied on a degree of emotional truth and creative mystery. That psychological anchor – a belief that human intention sits behind what we are looking at – turns out to matter more than we like to admit.

Turns out, people care about authenticity

Indeed, people often value objects more when they believe those objects carry traces of a person’s intention or history. This is the case even when the object is an image that doesn’t differ in any material way from a computer-generated one.

To some degree, this signals consumers are sensitive to the presence of a human creator, because when visually compelling computer-generated images are labelled as machine-made, people tend to rate them less favourably.

Indeed, when the same paintings are randomly labelled as either “human created” or “AI created”, people consistently judge the works they believe to be “human created” as more beautiful, meaningful and profound.

It seems the simple presence of an AI label reduces the perceived creativity and value.

A betrayal of creativity

However, there is an important caveat here. These studies rely on people being told who made the work. The effect is a result of attribution, not perception. And so this limitation points towards a deeper problem.

If evaluations change purely because people believe a work was machine-made, the response is not about quality; it is about meaning. It reflects a belief that creativity is tied to intention, effort and expression. These are qualities an algorithm doesn’t possess, even when it creates something visually persuasive. In other words, the label carries emotional weight.

There are, of course, obvious examples of when AI goes comedically wrong. In early 2024, the Queensland Symphony Orchestra promoted its brand using a very strange AI-generated image most people instantly recognised as unnatural. Part of the backlash, along with the unsettling weirdness of the image, was the perception an arts organisation was betraying human creativity.

But as AI systems improve, people often struggle to distinguish synthetic from real. Indeed, AI-generated faces are judged by many to be just as real as, and sometimes more trustworthy than, actual photographs.

Research shows people overestimate their ability to detect deepfakes, and often mistake deepfake videos for authentic ones.

Although we can see emerging patterns here, the empirical research in this area is being outpaced by AI’s evolving capabilities. So we are often trying to understand psychological responses to a technology that has already evolved since the research took place.

As AI becomes more sophisticated, the boundary between human and machine-made creativity will become harder to perceive. Commerce may not be particularly troubled by this. If the output performs well, the question of origin becomes secondary.

Why we value creativity

But creative work has never been only about generating content. It is a way for people to express emotion, experience, memory, dissent and interpretation.

And perhaps this is why the rise of “Made by Humans” actually matters. Marketers are not simply selling provenance, they are responding to a deeper cultural anxiety about authorship in a moment when the boundaries of creativity are becoming harder to perceive.

Indeed, one could argue there is an ironic tension here. Marketing is one of the professions most exposed to being superseded by the same technology marketers are now trying to differentiate themselves from.

So whether these human-made claims are a commercial tactic or a sincere defence of creative intention, there is significantly more at stake than just another way to drive sales.

About the Author:

Paul Harrison, Director, Master of Business Administration Program (MBA); Co-Director, Better Consumption Lab, Deakin University

This article is republished from The Conversation under a Creative Commons license. Read the original article.

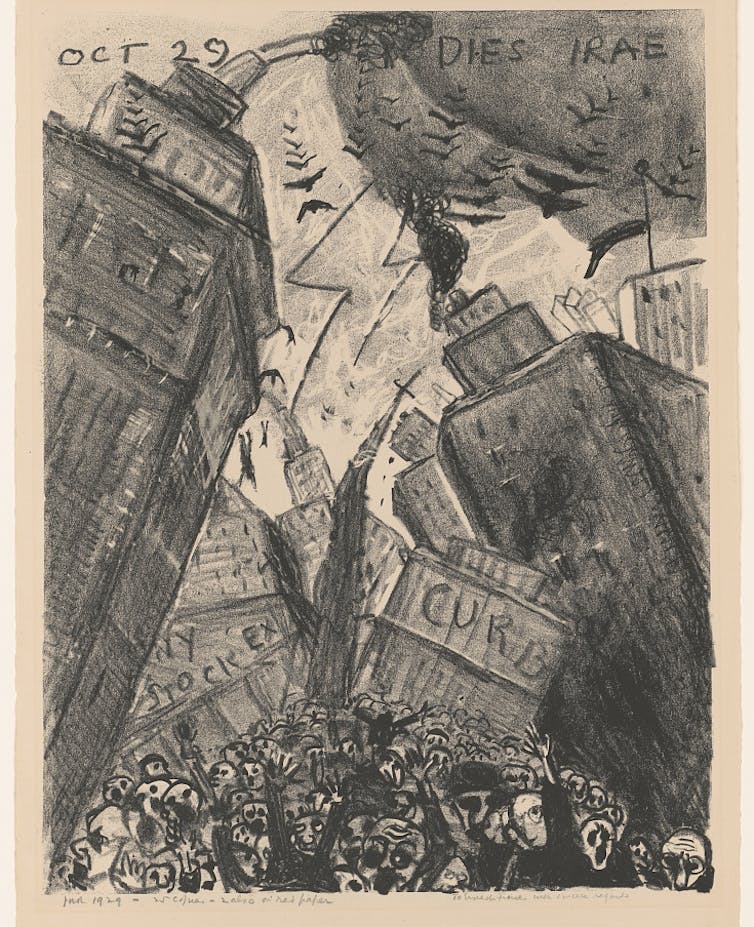

A crowd gathers outside the New York Stock Exchange following the ‘Great Crash’ of October 1929.
